Things are changing so rapidly in mobile hardware that we are entering an era where there will no longer be a difference in feature sets or capabilities between what your phone can do and what a high end PC can do. The difference will simply be how much power the chips are allowed to consume.

Now, I don't want to sound like I am just blindly pushing our own chips here, but I believe Tegra K1 is an early glimpse of things to come, and probably not just from us. I expect the rest of the industry to follow eventually, until developers can safely assume a relatively consistent feature set from PC down to mobile. K1 is just the first example, because the GPU core in it is Kepler. And that is not just some marketing trickery, it's actually the same microarchitecture that powers crazy things like the GTX780. Over the last few years a lot of blood, sweat and tears went into making this possible. And while due to size, power and thermal constraints it only has 192 "cores" instead of 2304, it still smokes the competition perf-wise. More importantly, it's in a completely different league when it comes to features, as it is capable of full desktop class OpenGL 4.4, including geometry shaders, tessellation, compute, etc., plus extensions enabling things like bindless!

Here is where I present lessons learned, bits of code and rants related to technology.

Saturday, June 28, 2014

Friday, June 27, 2014

Tegra Glass Fracture Demo

After that demo I started fiddling around with the idea of bringing it to Tegra. I actually rewrote everything with a keen eye on performance. Starting with Tegra 3 I was able to get the refraction/reflection actually working at around 30fps. Tegra 4 allowed me to hit 60fps and turn the shadows on (using a little mip-chain hack to achieve the penumbra). K1 though allows me to blow all that out of the water and bring the full experience of the PC version to mobile using the desktop class OpenGL 4.4 feature set. And Nathan Reed took my crazy geometry shader for doing the caustics and added an even crazier use of the tessellator to make the caustics truly mind blowing and better than the original PC version.

Anyways, the Tegra version of this demo didn't get a lot of attention, but we have shown it at our booth at a few trade shows, so I figured it was time for the interwebs to get a taste.


Friday, June 20, 2014

Screen Space Surface Generation circa 2005

When working on a cheesy little demo with John Ratcliff called "ageia carwash" I decided to try an idea that had been bouncing around my head: render the particles as sprites that look like the normals of a sphere, blur the result a bit, discard anything whose post-blur alpha falls below a particular threshold, then apply some lighting and fake refraction in an attempt to create a GPU friendly real-time liquid surface. Personally I thought this first attempt was pretty good, but I couldn't convince everyone higher up the food chain that it was a reasonable compromise. I was just a kid back then and didn't push for the idea hard enough I guess :/
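A rough CPU sketch of that pipeline in Python might look like the following. This is not the original demo code (which ran on the GPU); the function names, the box blur, the buffer layout and the single directional light are all assumptions made to keep the idea readable: splat sphere-normal sprites, blur, drop anything whose blurred alpha is under a threshold, then light what is left.

```python
import math

def splat_particles(particles, width, height, radius):
    """Draw each particle as a sprite storing sphere normals (nx, ny, nz) plus alpha."""
    buf = [[(0.0, 0.0, 0.0, 0.0) for _ in range(width)] for _ in range(height)]
    for px, py in particles:
        for y in range(max(0, int(py - radius)), min(height, int(py + radius) + 1)):
            for x in range(max(0, int(px - radius)), min(width, int(px + radius) + 1)):
                dx, dy = (x - px) / radius, (y - py) / radius
                d2 = dx * dx + dy * dy
                if d2 > 1.0:
                    continue  # outside the sprite's disc
                nz = math.sqrt(1.0 - d2)
                ox, oy, oz, _ = buf[y][x]
                # Overlapping sprites add their normals; alpha just marks coverage.
                buf[y][x] = (ox + dx, oy + dy, oz + nz, 1.0)
    return buf

def box_blur(buf, width, height, r):
    """Average every channel over a (2r+1)^2 window; off-screen pixels count as empty."""
    area = float((2 * r + 1) ** 2)
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            acc = [0.0, 0.0, 0.0, 0.0]
            for sy in range(max(0, y - r), min(height, y + r + 1)):
                for sx in range(max(0, x - r), min(width, x + r + 1)):
                    for c in range(4):
                        acc[c] += buf[sy][sx][c]
            row.append(tuple(v / area for v in acc))
        out.append(row)
    return out

def shade(buf, threshold, light):
    """Discard pixels under the alpha threshold, Lambert-shade the rest (None = discarded)."""
    image = []
    for row in buf:
        out_row = []
        for nx, ny, nz, alpha in row:
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            if alpha < threshold or length == 0.0:
                out_row.append(None)
                continue
            n_dot_l = (nx * light[0] + ny * light[1] + nz * light[2]) / length
            out_row.append(max(0.0, n_dot_l))
        image.append(out_row)
    return image

# Two overlapping particles should merge into one continuous blob.
normals = splat_particles([(6, 8), (10, 8)], 16, 16, radius=3)
image = shade(box_blur(normals, 16, 16, r=1), threshold=0.5, light=(0.0, 0.0, 1.0))
```

The blur is what fuses neighbouring sprites into one surface, and the threshold is what gives that surface a crisp edge again. Fake refraction would be one more step on top, offsetting a background lookup by the blurred normal's x and y.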

Luckily Simon Green independently had the same idea at NVIDIA and has over time turned it into the go-to method for rendering particle based liquids in real-time.

Also around this time Matthias Müller, Simon Schirm and Stephan Duthaler had another novel technique called Screen Space Meshes (published in 2007, but I think they had this working much earlier than that), which did marching squares in 2D and then projected that back out into 3D. It was fast enough for real-time and could be run efficiently on our own hardware, so that's the direction we went for a while. It even ended up in a few games I think. But of course, eventually we ended up back at Simon's solution by the time the acquisition came around.

Anyways, this video was captured early in 2005, before we had even added lighting to the demo, and it even features our old logo.

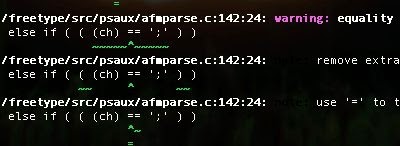

Enabling color output with ccache+clang

Typically when you compile with clang you get some pretty sexy color coded error messages (as seen above). This is great and all, but if you are running through ccache (which is awesome btw), then you will notice that you lose the hot color coding, which will probably make you sad. The problem isn't ccache stripping out the color coding exactly, it's that clang is detecting that it's not printing to a terminal that supports color (because it's going through the ccache process, which knows nothing about color).

The fix is quite easy though, just pass "-fcolor-diagnostics" on the command-line to clang like so...

$(CCACHE) $(CLANG) -fcolor-diagnostics

...but what if you want to run your build through some sort of automated build system that then forwards the log to people via email or pipe it through some other system that doesn't understand color? Well I got a solution for that as well, just have your makefile check for color!

COLOR_DIAGNOSTICS = $(shell if [ `tput colors` -ge 8 ]; then echo -fcolor-diagnostics; fi)

In this case I check for a terminal that supports at least 8 colors.

$(CCACHE) $(CLANG) $(COLOR_DIAGNOSTICS)

Texture-Space Particle Fluid Dynamics

It was fairly simple to implement, everything was in 2D so I used a world-space normalmap to compute which direction was up for each particle, and sampled a tangent-space normalmap to apply variable friction and modulate the staining function.
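As a minimal sketch of what one particle update could look like under that description (in Python, and not the original code): gravity is projected onto the surface using the world-space normal, then expressed in UV space. The per-texel `tangent` and `bitangent` vectors, the friction formula based on how far the tangent-space normal tilts away from flat, and all of the constants are assumptions for illustration only.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def step_particle(pos, vel, world_normal, tangent, bitangent, detail_normal, dt,
                  gravity=(0.0, -9.8, 0.0), base_friction=0.5, rough_friction=4.0):
    """Advance one texture-space particle by dt.

    pos, vel       -- 2D position and velocity in UV space
    world_normal   -- sample from the world-space normal map at pos
    tangent,
    bitangent      -- hypothetical world-space directions of increasing U and V
    detail_normal  -- sample from the tangent-space normal map at pos
    """
    # Remove the part of gravity pushing into the surface; what remains slides along it.
    n_dot_g = dot(world_normal, gravity)
    slide = tuple(g - n_dot_g * n for g, n in zip(gravity, world_normal))

    # Express the sliding force in texture space.
    accel = (dot(slide, tangent), dot(slide, bitangent))

    # Assumed friction model: bumpier texels (detail normal tilted away from +Z) drag more.
    roughness = 1.0 - max(0.0, min(1.0, detail_normal[2]))
    friction = base_friction + rough_friction * roughness
    damping = max(0.0, 1.0 - friction * dt)

    vel = ((vel[0] + accel[0] * dt) * damping, (vel[1] + accel[1] * dt) * damping)
    pos = (pos[0] + vel[0] * dt, pos[1] + vel[1] * dt)
    return pos, vel

# A particle on a vertical wall whose V axis points up in world space.
wall_pos, wall_vel = step_particle((0.5, 0.5), (0.0, 0.0), (0.0, 0.0, 1.0),
                                   (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
                                   (0.0, 0.0, 1.0), dt=0.1)
```

On a wall the particle runs down the V axis, while on a flat floor the projected gravity is zero and it stays put. The same roughness value could also drive how much stain a particle leaves behind.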

This never got beyond weekend experiment status, but I did capture this video at some point to prove the concept kind of worked. Next steps would have been moving particles back and forth between the 2D simulation and a 3D simulation (so you could, say, pour water on the floor) and handling UV wrapping.

Thursday, June 19, 2014

The story of "Killdozer"/"Flank"

Then around 4 or 5 years ago Chris Lamb, Dane Johnston, and I were working in our spare time on an RTS that would map equally well to PC/Mac and tablets. Fundamentally that meant a Myth style RTS (no building, just combat), a click-and-drag or touch-and-drag camera, and context aware interaction with units/items. But we eventually gave up on that particular project because our day jobs didn't allow enough time to ever really complete the vision, so we moved onto something a bit simpler. We had only gotten to a basic prototyping phase of development before moving on, but I came across some video captures and thought it was time to share them. And while I didn't fully realize the "killdozer" dream before killing the project, that was where I was headed.

With that said there was some pretty cool tech involved considering our target was iPad (although these videos were captured on PC).

It used an engine that I have been fiddling with for the last 10 years or so as a hobby. I had never really used it for much successfully (never enough time to ship a game!), but because of that it actually had fairly mature tools and supported a wide range of platforms. I was also always totally obsessive about CPU performance, so when mobile came around it handled the transition quite easily.

A layered terrain system that was deformable. This basically worked by layering different heightfields on top of each other each with different properties. Sand on top of dirt on top of bedrock, etc. This allowed the user to deform the level and change gameplay, but also allowed the level designer to place hard limits and obstacles in the environment.
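To make the layering idea concrete, here is a small Python sketch, not the engine's actual data structures. Each layer is its own thickness field, digging eats through layers from the top down, and an indestructible layer acts as the designer's hard limit. The `hardness` property and the "effort" units are assumptions picked for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    hardness: float   # assumed property: effort per unit of thickness, math.inf = indestructible
    thickness: list   # per-cell thickness of this layer (a flattened heightfield)

class LayeredTerrain:
    def __init__(self, layers):
        self.layers = layers  # ordered bottom to top

    def height(self, cell):
        """Surface height is just the sum of every layer's thickness at that cell."""
        return sum(layer.thickness[cell] for layer in self.layers)

    def dig(self, cell, effort):
        """Spend 'effort' removing material from the top layer downwards."""
        for layer in reversed(self.layers):
            if effort <= 0.0 or math.isinf(layer.hardness):
                break  # out of effort, or hit a layer the designer made a hard limit
            removed = min(layer.thickness[cell], effort / layer.hardness)
            layer.thickness[cell] -= removed
            effort -= removed * layer.hardness

terrain = LayeredTerrain([
    Layer("bedrock", math.inf, [1.0]),
    Layer("dirt", 2.0, [2.0]),
    Layer("sand", 1.0, [1.0]),
])
terrain.dig(0, 3.0)  # strips all the sand, then half the dirt
```

Keeping the layers separate means gameplay code can ask what material is exposed at a cell, not just how high the ground is.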

But probably the coolest thing was a volume-conserving shallow water simulation running on the GPU (using pixel shaders). The idea was we wanted to plan for some cool events in the game like breaking dams to change the layout of the map in various ways during gameplay. Eventually my plan was to extend this simulation to the terrain itself, but I never got around to it.
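The original shader isn't shown here, so the following is only a CPU sketch in Python of one common way to get volume conservation on a grid, under my own assumptions: a first pass computes how much water each cell sends to each lower neighbour (scaled so it never gives away more than it holds), and a second pass gathers, so every unit leaving one cell arrives in another. The two-pass gather structure is the sort of thing that maps onto ping-ponged render targets, but the names, the `rate` constant and the lack of momentum are all simplifications.

```python
NEIGHBORS = [(1, 0), (-1, 0), (0, 1), (0, -1)]  # index i and i ^ 1 are opposite directions

def step_water(terrain, water, rate=0.2):
    """One simulation step; terrain and water are 2D lists of heights and depths."""
    height, width = len(water), len(water[0])

    # Pass 1: outflow from every cell towards each neighbour with a lower surface.
    flux = [[[0.0] * 4 for _ in range(width)] for _ in range(height)]
    for y in range(height):
        for x in range(width):
            surface = terrain[y][x] + water[y][x]
            for i, (dx, dy) in enumerate(NEIGHBORS):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    drop = surface - (terrain[ny][nx] + water[ny][nx])
                    if drop > 0.0:
                        flux[y][x][i] = rate * drop
            total = sum(flux[y][x])
            if total > water[y][x]:
                # Never send out more water than the cell actually holds.
                scale = water[y][x] / total
                flux[y][x] = [f * scale for f in flux[y][x]]

    # Pass 2: each cell gathers what its neighbours sent and subtracts what it sent.
    new_water = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            inflow = 0.0
            for i, (dx, dy) in enumerate(NEIGHBORS):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    inflow += flux[ny][nx][i ^ 1]
            new_water[y][x] = water[y][x] - sum(flux[y][x]) + inflow
    return new_water

# A "dam break": all the water starts in one corner of a flat 4x4 map.
ground = [[0.0] * 4 for _ in range(4)]
pool = [[0.0] * 4 for _ in range(4)]
pool[0][0] = 8.0
for _ in range(50):
    pool = step_water(ground, pool)
```

Because the terrain height only enters through the surface comparison, deforming the ground (or removing a dam) between steps changes where the water goes without any other bookkeeping.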
